Publications

* indicates equal contribution.

MS in Robotics | University of Michigan

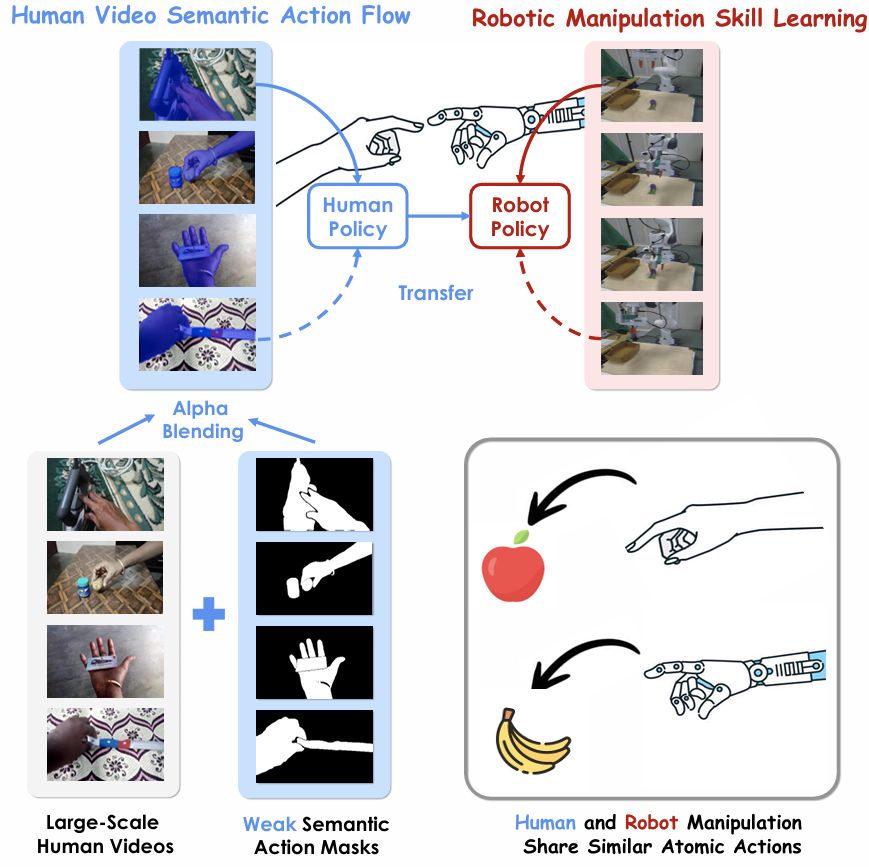

Changhe Chen is a master's student in Robotics at the University of Michigan, advised by Prof. Nima Fazeli and Prof. Xiaonan (Sean) Huang. Prior to joining the University of Michigan, Changhe received his BASc in Electrical Engineering from the University of Toronto, where he worked with Prof. Chan Carusone on reinforcement learning for analog circuit design. During his undergraduate studies, he also interned as a research assistant at Huawei's Noah's Ark Lab, developing multi-agent reinforcement learning platforms and advanced trajectory prediction models for autonomous driving. In summer 2025, Changhe enjoyed collaborating on robotics research and real-world robotic systems with Heng Yang's group at Harvard University. Changhe's current research focuses on embodied AI, data-efficient robot learning, and learning from humans, with an emphasis on developing multi-modal visual language models and instruction-based semantic segmentation to enable robots to better understand and execute complex tasks.

Investigated how multi-task training under shared scenes shapes latent-space clustering and task interference in visual representations.

Analyzed latent phase transitions across subgoals in long-horizon tasks to uncover implicit structure and dynamics within encoder representations.

Proposed scene alignment and task grouping strategies to improve representation consistency and generalization across varied environments.

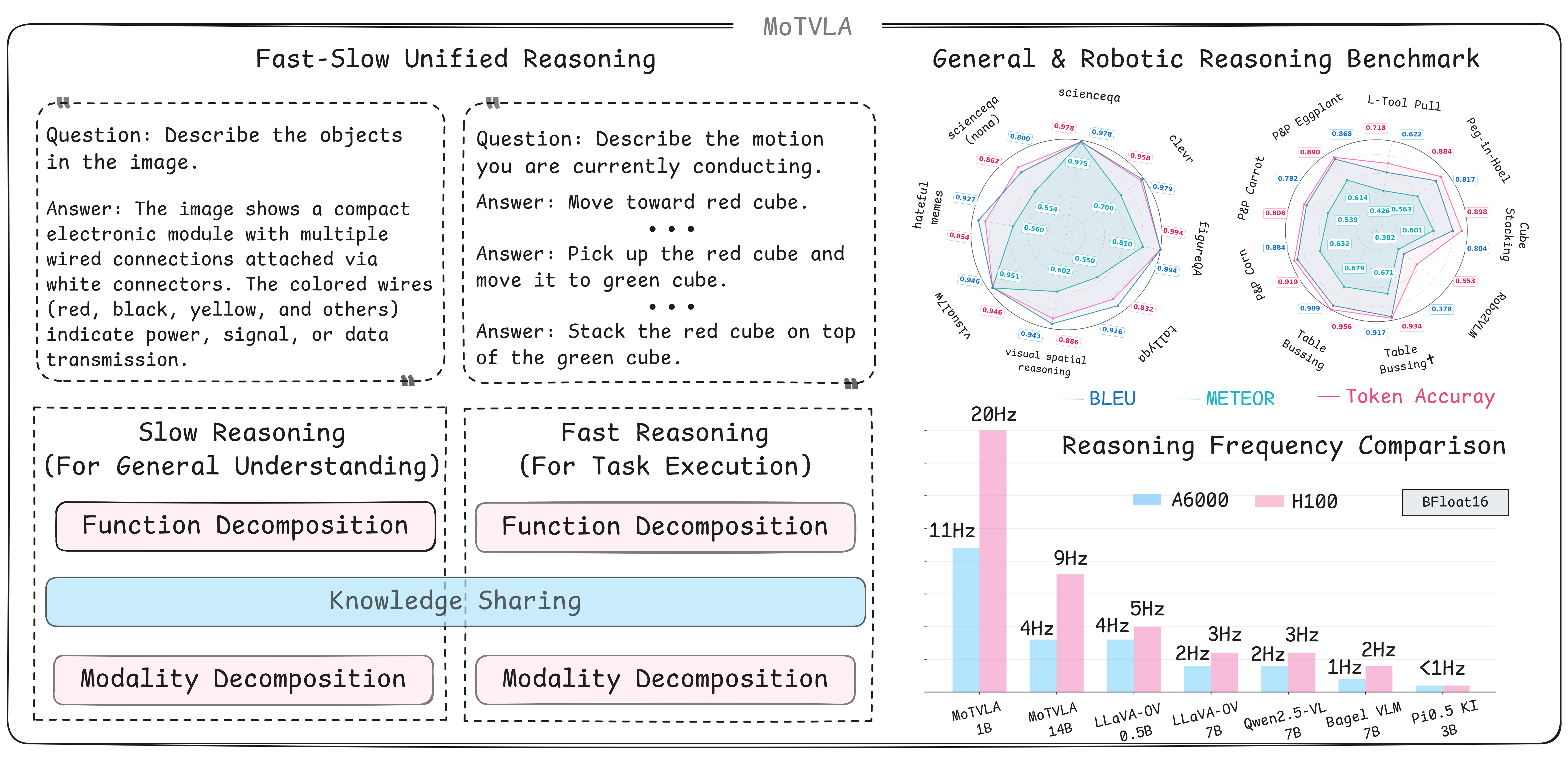

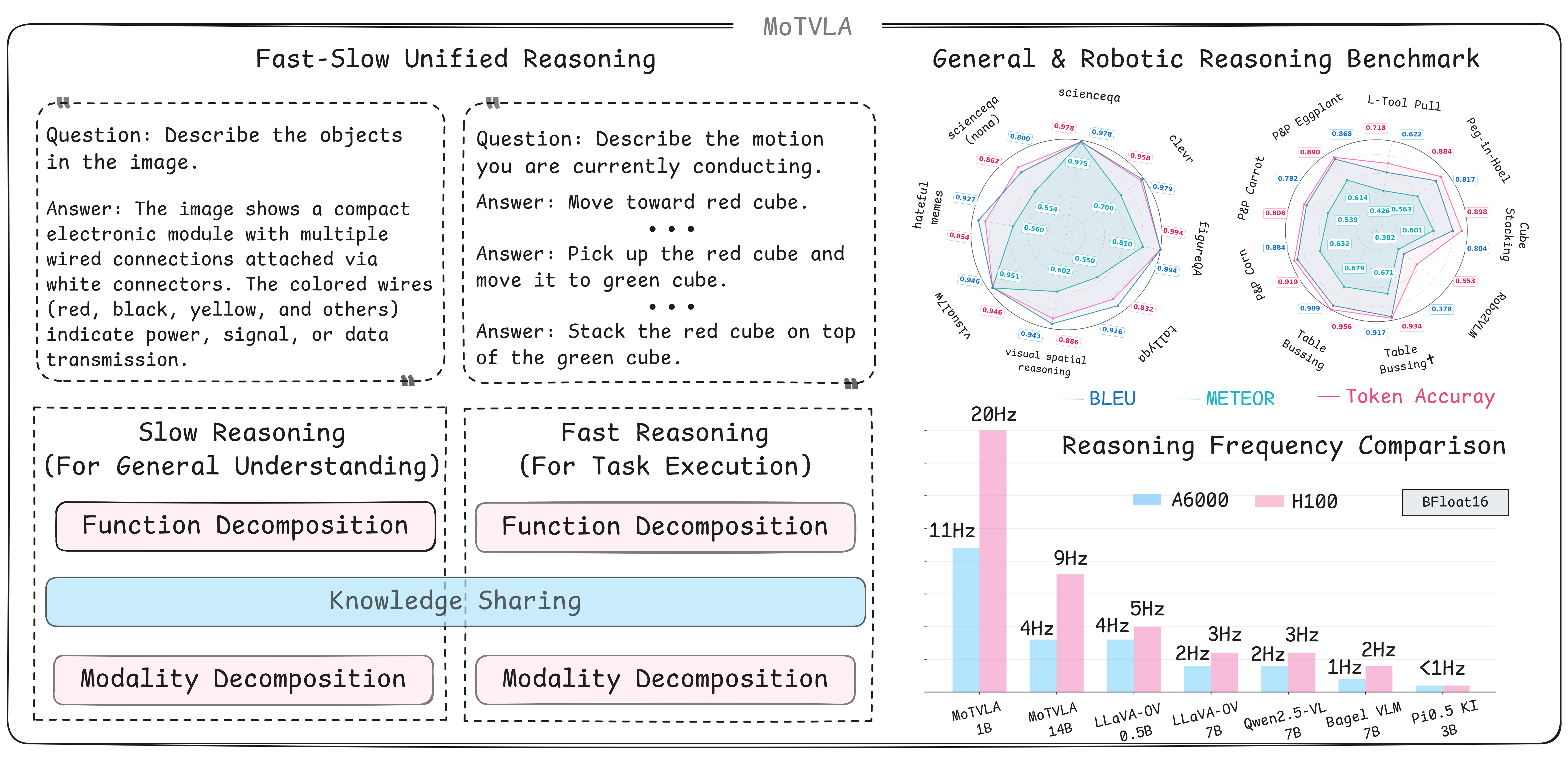

Designed MoTVLA, a mixture-of-transformers framework unifying generalist vision-language reasoning with fast motion decomposition for robotic manipulation.

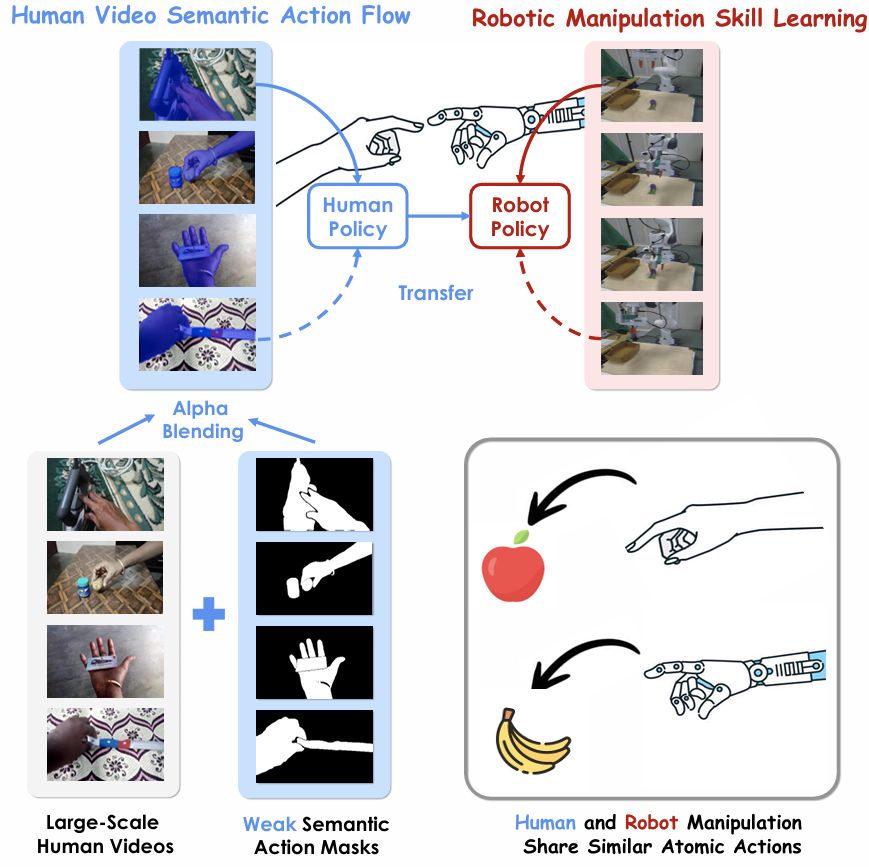

Developed diffusion-policy methods to enhance language steerability and inference speed in robot skill learning.

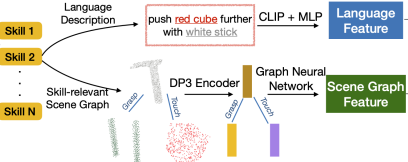

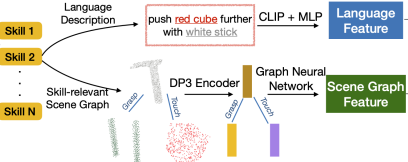

Explored scene graph-based visuomotor policies integrating GNNs and diffusion learning for robust, long-horizon skill composition under visual distractors.

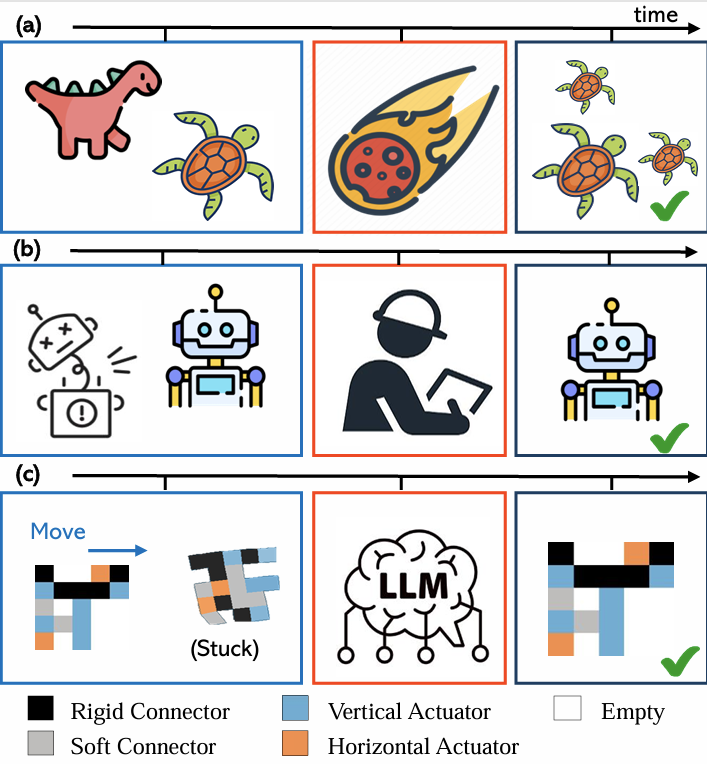

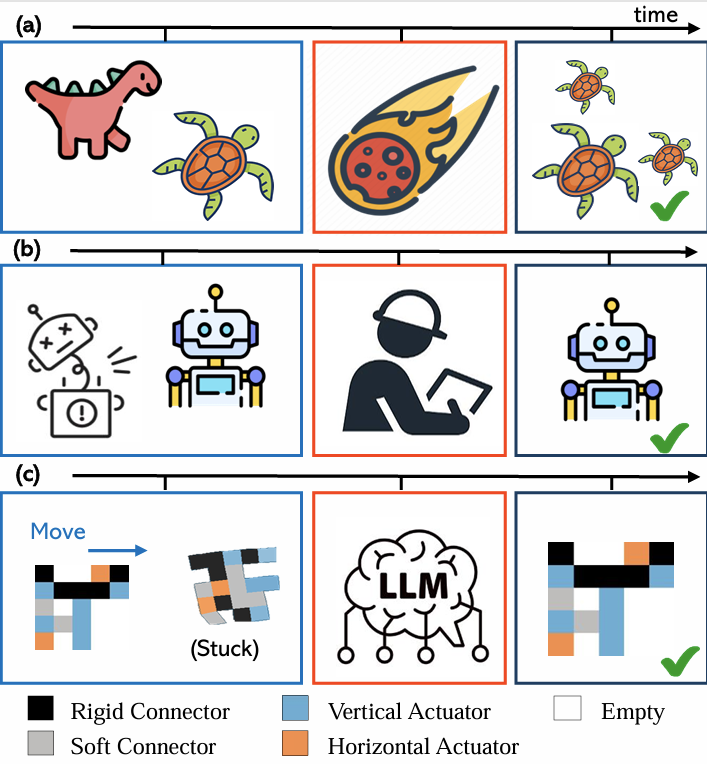

Developed a data generation pipeline in Evogym for evaluating robotic structures.

Utilized large language models (LLMs) to optimize robotic structures through AI insights.

Enhanced robotic simulation workflows by combining AI-driven evaluations with structured data generation for improved design and performance analysis.

Designed a robot task-learning system integrating multi-modal VLMs for improved task execution.

Implemented instruction-based semantic segmentation to enhance robot perception.

Led a team to develop an RL algorithm optimizing circuit parameters using Cadence and TSMC 65nm PDK.

Used RGCN and DDPG to encode circuit topology and shorten design cycles by 80%.

Multi-Agent RL Simulation Platform “SMARTS”

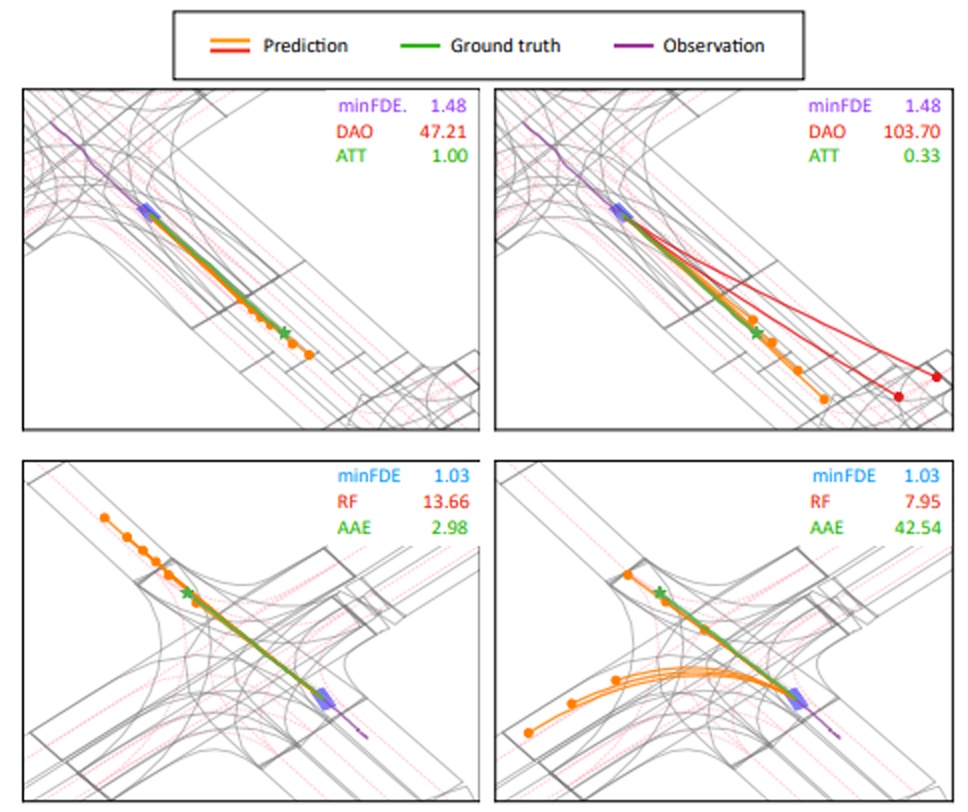

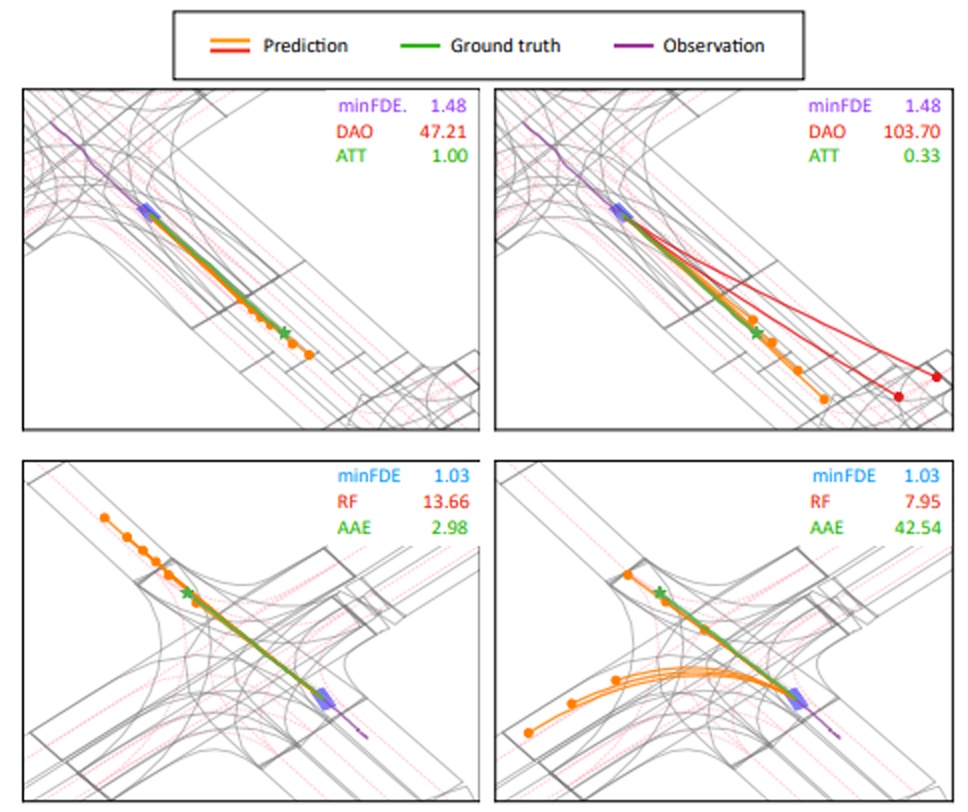

Autonomous Driving Trajectory Prediction Models

Designed radar-based autonomous vehicle systems; 1st place in SAE Autodrive Challenge II.

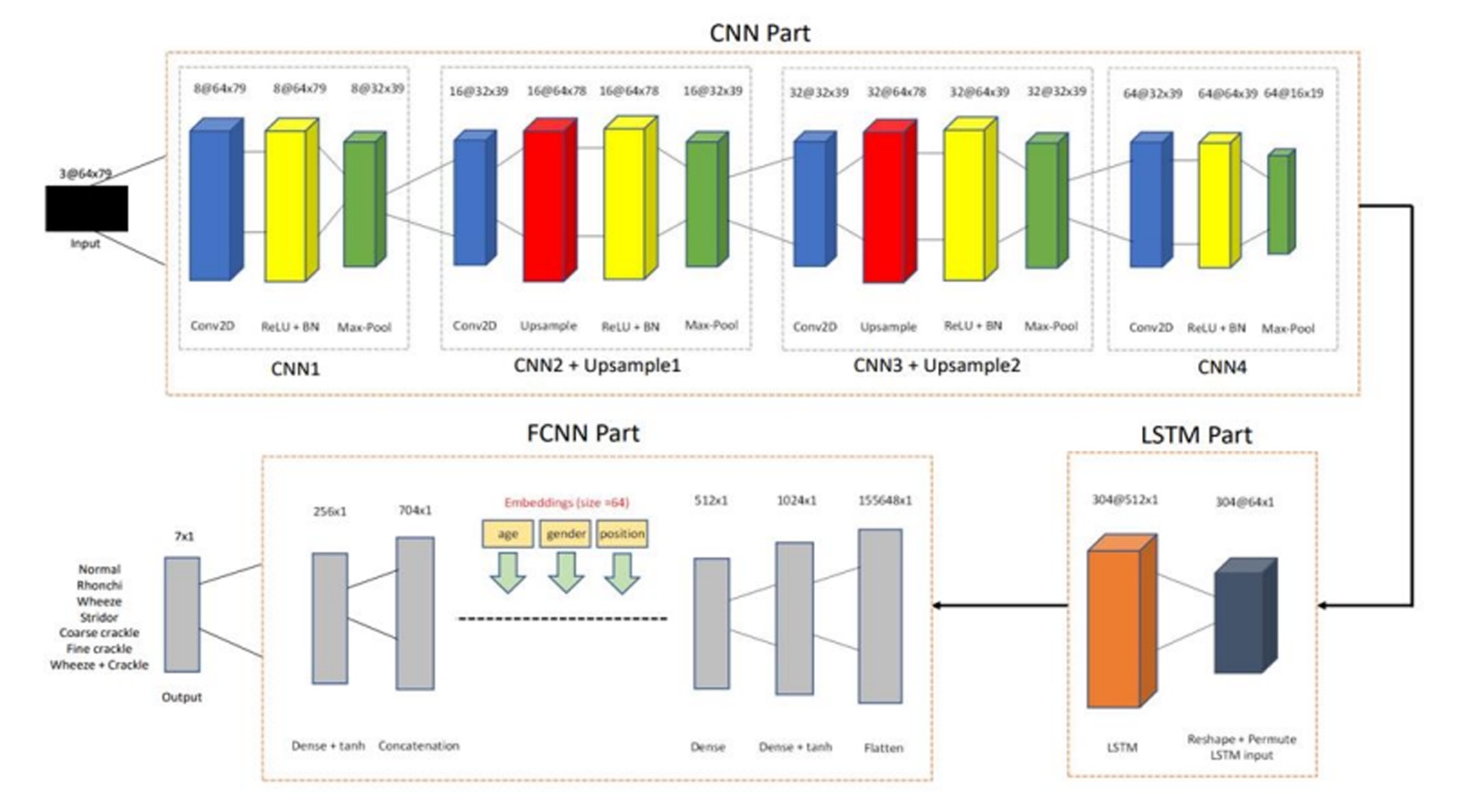

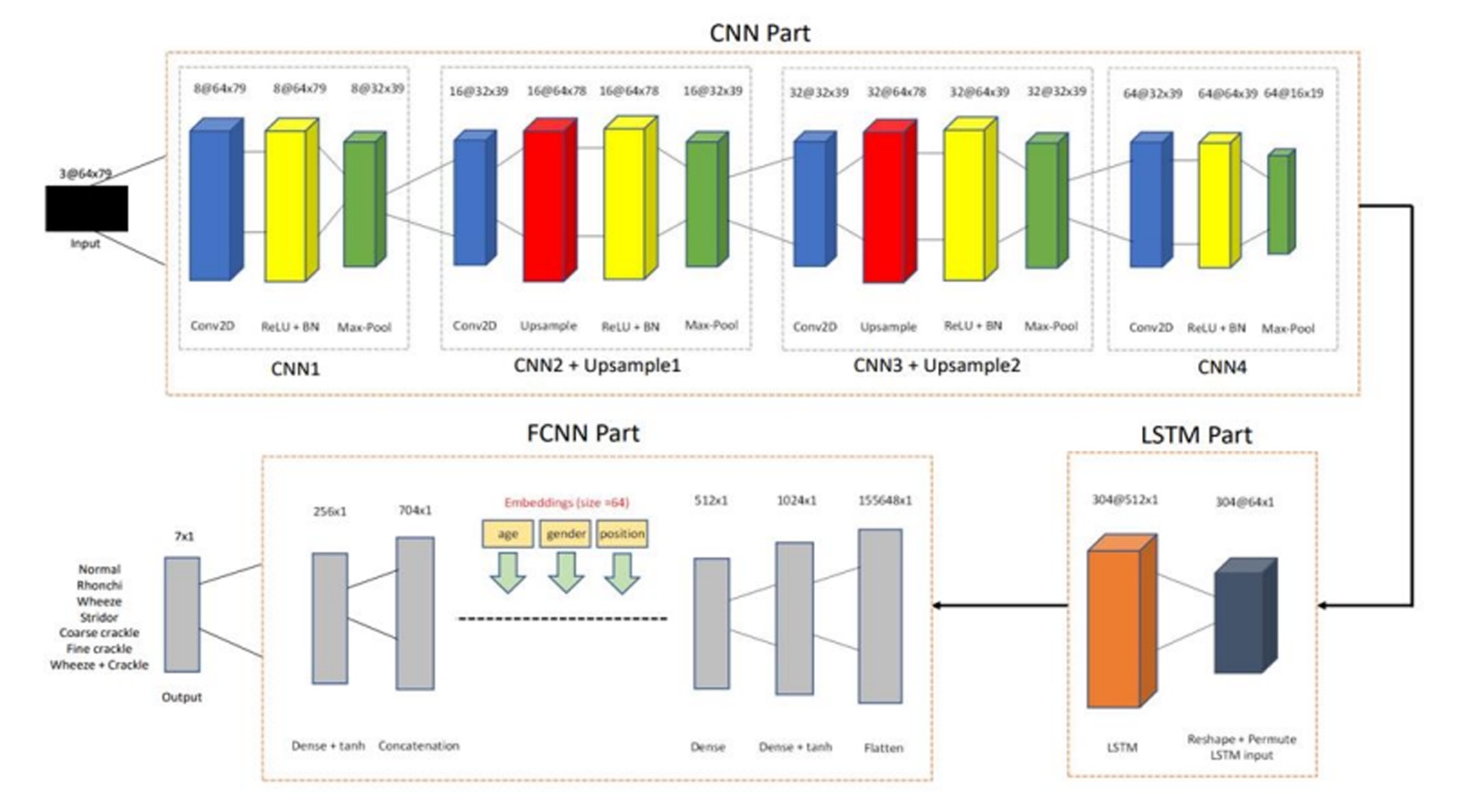

Led a team to develop an ML-based respiratory sound classification model, reaching 75% accuracy.

Led a team to build a C++ navigation app using the OpenStreetMap API with a responsive UI.

Programming: Python (PyTorch, TensorFlow), C/C++, MATLAB, Java, Verilog, ARM Assembly

Software Tools: ROS1/2, RL (DDPG, RGCN), SMARTS Simulation, Deep Graph Library

Email: changhec@umich.edu

GitHub: github.com/AisenGinn